Many of our previous activities used an object's reflectance, that is, how it interacts with a light source, for color segmentation, white balancing, and even classification. In this activity we will again use this phenomenon, this time to reconstruct a 3D surface. The method we will implement is called photometric stereo.

To better understand the method, it helps to backtrack a little. Consider a camera, such as those we have been using in our previous activities. Essentially, the image obtained by a camera is simply the light intensity, I, reflected by its subject, which depends on the material's reflectance. However, aside from reflectance, this intensity pattern also depends on the geometry of the object, which produces light and dark (shadow) areas. This is easier to understand by looking at figure 1, where the reflected intensity at point P is affected by the angle at which the light hits the surface, resulting in either a bright area or a shadow. Hence the intensity profile of an object also contains information on its geometry. Equation 1 gives an expression for this intensity, where rho is the reflectance and k is a scaling parameter.
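To make the dependence on angle concrete, here is a minimal sketch of a Lambertian shading model in Python with NumPy. It assumes the intensity is proportional to the cosine of the angle between the unit surface normal and the unit direction to the light, and that surfaces facing away from the light are simply dark. The function name `lambertian_intensity` and the clamp at zero are illustrative assumptions, not taken from the original equation.

```python
import numpy as np

def lambertian_intensity(normal, light_dir, rho=1.0, k=1.0):
    """Assumed Lambertian model: I = k * rho * max(0, n . s) for unit n and s."""
    n = np.asarray(normal, dtype=float)
    s = np.asarray(light_dir, dtype=float)
    n = n / np.linalg.norm(n)   # unit surface normal
    s = s / np.linalg.norm(s)   # unit direction toward the light
    # Negative dot products mean the surface faces away from the light (shadow).
    return k * rho * max(0.0, float(n @ s))

# Tilt the surface away from a light placed straight overhead.
light = [0.0, 0.0, 1.0]
for angle_deg in (0, 30, 60, 90, 120):
    a = np.radians(angle_deg)
    normal = [np.sin(a), 0.0, np.cos(a)]
    print(angle_deg, round(lambertian_intensity(normal, light), 3))
```

The printed intensity falls as the tilt angle grows and stays at zero once the surface faces away from the source, which is the bright-to-shadow behavior described for point P.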

Looking at our model in figure 1 and equation 1, it becomes apparent that we should also take into account the type of light source. Point sources, line sources, and area sources produce different reflected intensity patterns, since their light arrives at the surface differently (different angles, and intensities that vary with distance). In this activity, however, we limit ourselves to the approximation that our point source is at infinity, which results in equation 1 having the form
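Under the usual Lambertian assumption, this distant-source form (equation 2) is commonly written as follows. The symbols S_0 (the constant direction to the source), g (the unit normal scaled by the reflectance), and V (the source direction scaled by k) are notation assumed here for illustration.

```latex
I(x,y) = k\,\rho(x,y)\,\hat{\mathbf{n}}(x,y)\cdot\mathbf{S}_0
       = \mathbf{g}(x,y)\cdot\mathbf{V},
\qquad
\mathbf{g} = \rho\,\hat{\mathbf{n}},
\quad
\mathbf{V} = k\,\mathbf{S}_0
```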

Since we know the intensity profile from our image, if we have information on the location of the light source we can obtain the geometry of the surface by solving for the normal vector n.

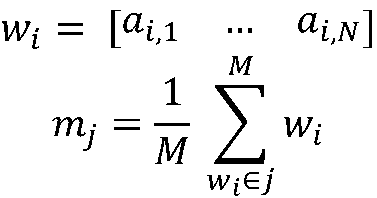

In this method, photometric stereo, we use multiple images of the same object, each taken with the light source at a different location, to estimate the object's three-dimensional geometry. Since we have images for different light source locations, we can rewrite equation 2 in matrix form, where each row corresponds to one pair of image (intensity profile) and light source. Solving for the normal vector n we obtain
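In the usual least-squares formulation, the matrix form and its solution can be written as below. Here N is the number of images, I stacks the N intensities observed at one pixel, each row of V is the (scaled) source direction for one image, and g is the surface normal scaled by the reflectance; these symbols are assumed notation.

```latex
\begin{align}
\begin{pmatrix} I_1 \\ I_2 \\ \vdots \\ I_N \end{pmatrix}
&=
\begin{pmatrix}
V_{11} & V_{12} & V_{13} \\
V_{21} & V_{22} & V_{23} \\
\vdots & \vdots & \vdots \\
V_{N1} & V_{N2} & V_{N3}
\end{pmatrix}
\mathbf{g},
\\
\mathbf{g} &= \left(V^{\mathsf T} V\right)^{-1} V^{\mathsf T}\,\mathbf{I},
\qquad
\hat{\mathbf{n}} = \frac{\mathbf{g}}{\lVert \mathbf{g} \rVert}
\end{align}
```

At least three images with non-coplanar source directions are needed for V^T V to be invertible; a fourth image, as used here, makes the system overdetermined.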

We also know that this normal vector n is related to the surface f(x,y) through its gradient, such that
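For a surface written as z = f(x, y), the normal is proportional to (-∂f/∂x, -∂f/∂y, 1), so the standard relation between the gradient and the components of the unit normal is:

```latex
\frac{\partial f}{\partial x} = -\frac{n_x}{n_z},
\qquad
\frac{\partial f}{\partial y} = -\frac{n_y}{n_z}
```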

Finally we obtain the surface f(x,y) by integrating
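One common way to write this integration is as a line integral of the gradient, first along x and then along y. The choice of path and the reference height f(0,0) = 0 are assumptions made here for illustration.

```latex
f(u,v) = \int_{0}^{u} \frac{\partial f}{\partial x}(x, 0)\,dx
       + \int_{0}^{v} \frac{\partial f}{\partial y}(u, y)\,dy
```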

We implement this algorithm in Scilab and use matrix operations to reduce the computation time.
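The Scilab code itself is not reproduced here. As a rough illustration of the same steps, the following NumPy sketch solves for the normals of all pixels with a single least-squares call, converts them to gradients, and integrates with cumulative sums. The function name `photometric_stereo`, the synthetic spherical cap, the four light directions, the unit pixel spacing, and the cumulative-sum integration are all assumptions made for this example, not the author's implementation or data.

```python
import numpy as np

def photometric_stereo(images, lights):
    """Sketch: estimate unit normals and a height map from N images.

    images: array (N, H, W), one intensity image per light source.
    lights: array (N, 3), one source direction per image (rows of V).
    """
    n_img, h, w = images.shape
    I = images.reshape(n_img, -1)                    # (N, H*W): one column per pixel
    # Least-squares solution of V g = I for every pixel at once.
    g, *_ = np.linalg.lstsq(lights, I, rcond=None)   # (3, H*W)
    length = np.linalg.norm(g, axis=0)
    normals = g / np.maximum(length, 1e-10)          # guard against dark pixels
    nx, ny, nz = normals.reshape(3, h, w)
    nz = np.where(np.abs(nz) < 1e-10, 1e-10, nz)     # avoid division by zero
    fx = -nx / nz                                    # df/dx
    fy = -ny / nz                                    # df/dy
    # Approximate integration: cumulative sums along x (columns) and y (rows),
    # assuming unit pixel spacing.
    height = np.cumsum(fx, axis=1) + np.cumsum(fy, axis=0)
    return normals.reshape(3, h, w), height

# Synthetic check: a spherical cap lit from four assumed directions.
h = w = 64
y, x = np.mgrid[-1:1:h * 1j, -1:1:w * 1j]
R = 1.5                                              # radius larger than the grid
z = np.sqrt(R**2 - x**2 - y**2)
true_normals = np.stack([x, y, z]) / R               # unit normals of the sphere
lights = np.array([[0.2, 0, 1], [-0.2, 0, 1],
                   [0, 0.2, 1], [0, -0.2, 1]], dtype=float)
lights /= np.linalg.norm(lights, axis=1, keepdims=True)
# Render one Lambertian image per light (reflectance and k set to 1).
images = np.clip(np.einsum('ij,jhw->ihw', lights, true_normals), 0, None)

normals, height = photometric_stereo(images, lights)
print("max normal error:", np.abs(normals - true_normals).max())
```

Reshaping the images so that every pixel becomes a column lets one matrix solve replace a loop over pixels, which is the kind of matrix operation that keeps the computation time low.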

Figure 2. Images of the surface taken with four different locations of the light source. The source locations were provided beforehand.

Figure 2 shows the raw images we used to calculate the 3D surface. We used four images, each with the light source at a different location, from the MATLAB file photos.mat. These images show a noticeable difference in shading as the light source position changes.

Figure 3. 3D plot of the calculated surface of the 3D object. Errors appear as spikes and dips over the surface.

Using the algorithm discussed above and the raw images shown in figure 2, we reconstruct the hemispherical surface shown in figure 3. The resulting surface is in good agreement with our expectation: the shape is indeed spherical and the background remains flat. However, the surface is also very rough, and there is even a gentle dip in the shape of a cross over the hemisphere. These imperfections are inherent to our method, since it is still only an approximation. A better result may be obtained with more images (more information, and hence a better approximation). Overall, the method is a success and is fairly simple to implement.

I give myself a grade of 10 in this activity. I thank Dr. Gay Pereze for her patience and useful advice.

Main Reference

A17: Photometric Stereo by Dr. Maricor Soriano, 2008
