Our final activity is again about image restoration. However, unlike the previous activity, where we tackled images with additive noise, this time we will be restoring blurred images. A blurred image is the result of applying a degradation function to an ideal image. Recall that in earlier activities we already encountered blurred images produced by convolving with apertures or by filtering in Fourier space. In this activity we focus only on blur caused by uniform linear motion, combined with additive Gaussian noise.

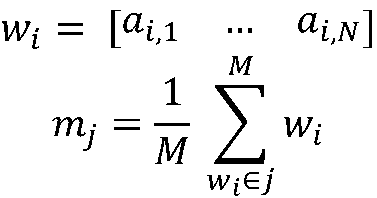

Assuming the degradation process is linear and position invariant, it can be modeled as a convolution of the image with the degradation function, so that in inverse (Fourier) space the blurred image is given by

$$G(u,v) = H(u,v)\,F(u,v) + N(u,v) \qquad (1)$$

where $H$ is the transfer function of the blurring process, $F$ is the Fourier transform of the undegraded image, and $N$ is the additive noise. For this activity we use the known transfer function of uniform linear motion blur,

$$H(u,v) = \frac{T}{\pi(ua+vb)}\,\sin\!\big[\pi(ua+vb)\big]\,e^{-j\pi(ua+vb)} \qquad (2)$$

where $a$ and $b$ are the total displacements along the $x$ and $y$ directions, respectively, and $T$ is the total exposure time.
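Equations 1 and 2 can be sketched in NumPy as below. This is a minimal illustration under assumptions not stated in the original: the frequencies u and v are taken in cycles per pixel (as returned by `np.fft.fftfreq`), so a and b are displacements in pixels along the columns (x) and rows (y), and the noise is added in the spatial domain, which by linearity of the Fourier transform is the same as adding N in Fourier space. The names `motion_blur_otf` and `degrade` are hypothetical.

```python
import numpy as np


def motion_blur_otf(shape, a, b, T=1.0):
    """Transfer function H(u, v) of uniform linear motion blur (equation 2).

    Assumption: u and v are in cycles per pixel (np.fft.fftfreq), so a and b
    are the total displacements in pixels along x (columns) and y (rows).
    """
    ny, nx = shape
    u = np.fft.fftfreq(nx)[np.newaxis, :]  # horizontal frequencies
    v = np.fft.fftfreq(ny)[:, np.newaxis]  # vertical frequencies
    s = u * a + v * b
    # np.sinc(s) = sin(pi*s) / (pi*s) and np.sinc(0) = 1, so H(0, 0) = T
    return T * np.sinc(s) * np.exp(-1j * np.pi * s)


def degrade(f, H, sigma=0.0, rng=None):
    """Equation 1: blur the image f with H, then add Gaussian noise of std sigma."""
    rng = np.random.default_rng() if rng is None else rng
    # .real drops round-off (and, for even sizes, a small Nyquist-bin residue)
    blurred = np.fft.ifft2(H * np.fft.fft2(f)).real
    # Adding noise in space is equivalent to adding its transform N in Fourier space
    return blurred + rng.normal(0.0, sigma, size=f.shape)
```

For instance, `degrade(f, motion_blur_otf(f.shape, a=5, b=5), sigma=0.001)` would blur a float grayscale array `f` diagonally and add noise with standard deviation 0.001, assuming the σ quoted for figure 3 is a standard deviation.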

As in the previous activity, restoration means applying a filter to the degraded image to obtain an estimate $\hat{F}$ of the ideal image. This time we use the Wiener (minimum mean square error) filter, which incorporates both the degradation function and the statistics of the additive noise. The filter is applied in inverse space:

$$\hat{F}(u,v) = \left[\frac{1}{H(u,v)}\,\frac{|H(u,v)|^2}{|H(u,v)|^2 + S_n(u,v)/S_f(u,v)}\right] G(u,v) \qquad (3)$$

where $S_n$ and $S_f$ are the power spectra of the noise and of the ideal (undegraded) image, respectively. In practice, however, we rarely know either of them. In such cases equation 3 is approximated by

$$\hat{F}(u,v) = \left[\frac{1}{H(u,v)}\,\frac{|H(u,v)|^2}{|H(u,v)|^2 + K}\right] G(u,v) \qquad (4)$$

where the ratio $S_n/S_f$ is replaced by a constant $K$.
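Equations 3 and 4 differ only in the noise-to-signal term, so one helper can cover both: pass the ratio S_n/S_f as an array for equation 3, or a scalar K for equation 4. This is a sketch, not the author's code; the name `wiener_restore` is hypothetical, and it assumes H is sampled on the same `np.fft.fft2` frequency grid as the image.

```python
import numpy as np


def wiener_restore(g, H, nsr):
    """Restore the degraded image g given the transfer function H.

    nsr is S_n/S_f: an array shaped like H (equation 3) or a scalar K (equation 4).
    """
    G = np.fft.fft2(g)
    # (1/H) |H|^2 / (|H|^2 + nsr) simplifies to conj(H) / (|H|^2 + nsr),
    # which stays finite where H = 0 as long as nsr > 0
    W = np.conj(H) / (np.abs(H) ** 2 + nsr)
    return np.fft.ifft2(W * G).real
```

Writing the filter as conj(H)/(|H|² + K) is algebraically identical to equation 4 but avoids an explicit division by H.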

In this activity we apply equations 1 and 2 to a grayscale image (figure 1) to produce a blurred image. We then restore it using equation 3, or equation 4 with different values of K, and repeat the whole procedure for different Gaussian noise strengths.
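The procedure above can be scripted as a grid over noise strength and K. The self-contained sketch below scores each restoration by its mean squared error (MSE) against the original; the MSE metric, the helper name `sweep`, and the cycles-per-pixel frequency convention are assumptions for illustration, not part of the original activity.

```python
import numpy as np


def motion_blur_otf(shape, a, b, T=1.0):
    # Equation 2, assuming u and v in cycles per pixel (a, b in pixels)
    u = np.fft.fftfreq(shape[1])[np.newaxis, :]
    v = np.fft.fftfreq(shape[0])[:, np.newaxis]
    s = u * a + v * b
    return T * np.sinc(s) * np.exp(-1j * np.pi * s)


def sweep(f, a, b, T, sigmas, Ks, seed=0):
    """Return {(sigma, K): MSE} for equation-4 restorations of a blurred, noisy f."""
    rng = np.random.default_rng(seed)
    H = motion_blur_otf(f.shape, a, b, T)
    H2 = np.abs(H) ** 2
    blurred = np.fft.ifft2(H * np.fft.fft2(f)).real
    results = {}
    for sigma in sigmas:
        g = blurred + rng.normal(0.0, sigma, size=f.shape)  # equation 1
        G = np.fft.fft2(g)
        for K in Ks:
            # conj(H) / (|H|^2 + K) is equation 4 with the 1/H factor cancelled;
            # K = 0 is allowed but blows up near zeros of H
            f_hat = np.fft.ifft2(np.conj(H) / (H2 + K) * G).real
            results[(sigma, K)] = float(np.mean((f_hat - f) ** 2))
    return results
```

A call such as `sweep(f, a=5, b=5, T=1, sigmas=[0, 0.001], Ks=[0, 1e-4, 1e-3, 1e-2])` returns one MSE per combination, which could be tabulated next to the restored images.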

Figure 2. Blurred and restored images for different values of K, T, a, and b, with the additive noise set to zero.

Figure 2 shows our results for an image that is only motion-blurred, with no additive noise. Decreasing K clearly improves the quality of the restoration, and in the extreme case K = 0 the restoration is perfect. This is expected from equations 1 and 4: setting K = 0, equation 4 reduces to

$$\hat{F}(u,v) = \frac{G(u,v)}{H(u,v)} = F(u,v) + \frac{N(u,v)}{H(u,v)} \qquad (5)$$

Since the additive noise is zero here, the last term of equation 5 vanishes and, at every frequency where $H(u,v) \neq 0$, we are left with a perfect restoration.
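Equation 5 can be checked numerically. In the sketch below (hypothetical helper name, cycles-per-pixel frequency convention assumed), the K = 0 restoration minus the original should equal the inverse transform of N/H, which is zero when no noise is added. It assumes an odd image size, so the blurred image is exactly real, and displacements small enough that ua + vb never reaches a nonzero integer, so H has no zeros; near a zero of H even floating-point round-off in G would be divided by a tiny number.

```python
import numpy as np


def inverse_filter_residual(f, a, b, T=1.0, sigma=0.0, seed=0):
    """Return (f_hat - f, ifft2(N / H)) for the K = 0 filter of equation 5."""
    rng = np.random.default_rng(seed)
    u = np.fft.fftfreq(f.shape[1])[np.newaxis, :]
    v = np.fft.fftfreq(f.shape[0])[:, np.newaxis]
    s = u * a + v * b
    H = T * np.sinc(s) * np.exp(-1j * np.pi * s)  # equation 2
    n = rng.normal(0.0, sigma, size=f.shape)
    g = np.fft.ifft2(H * np.fft.fft2(f)).real + n  # equation 1
    f_hat = np.fft.ifft2(np.fft.fft2(g) / H).real  # equation 5: G / H
    noise_term = np.fft.ifft2(np.fft.fft2(n) / H).real  # the N / H term
    return f_hat - f, noise_term
```

With a = b = 0.9 on a 33 × 33 array, for example, |ua + vb| stays below 1, so the first returned array is zero up to round-off when sigma = 0 and matches the second one when sigma > 0.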


Figure 3. Blurred and restored images for different values of K, T, a, and b, with additive Gaussian noise of strength σ = 0.001.


In figure 3 we show the restoration results when the blurred image also contains additive Gaussian noise. As in the noise-free case, a small value of K gives a good restoration. In this case, however, decreasing K further starts to produce dark, noisy images, and unlike the noise-free case, K = 0 gives a meaningless restored image that is almost pure noise. This follows from equation 5: with noise present, the term N/H no longer vanishes, and it becomes very large at frequencies where |H| is close to zero.

One more notable result is that even with the exact power spectra of the noise and the original image, as in equation 3, the reconstruction is not perfect, although the restored image is a significant improvement over the blurred one.
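This observation can be reproduced with the realized spectra S_f = |F|² and S_n = |N|² of the very image and noise used. The sketch below (hypothetical helper name, cycles-per-pixel frequency convention assumed) returns the MSE of the blurred image and of the equation 3 restoration. One possible reading of the result, offered as an interpretation rather than the author's conclusion: equation 3 uses only the magnitudes of F and N at each frequency, not their phases, so it can weight G but cannot subtract the particular noise realization from it.

```python
import numpy as np


def exact_spectra_wiener(f, a, b, T=1.0, sigma=0.001, seed=0):
    """Return (MSE of blurred image, MSE of equation 3 restoration)."""
    rng = np.random.default_rng(seed)
    u = np.fft.fftfreq(f.shape[1])[np.newaxis, :]
    v = np.fft.fftfreq(f.shape[0])[:, np.newaxis]
    s = u * a + v * b
    H = T * np.sinc(s) * np.exp(-1j * np.pi * s)  # equation 2
    F = np.fft.fft2(f)
    N = np.fft.fft2(rng.normal(0.0, sigma, size=f.shape))
    G = H * F + N  # equation 1, kept in Fourier space
    S_f, S_n = np.abs(F) ** 2, np.abs(N) ** 2  # exact (realized) power spectra
    # Equation 3 with numerator and denominator multiplied by S_f
    F_hat = np.conj(H) * S_f / (np.abs(H) ** 2 * S_f + S_n) * G
    g = np.fft.ifft2(G).real
    f_hat = np.fft.ifft2(F_hat).real
    return float(np.mean((g - f) ** 2)), float(np.mean((f_hat - f) ** 2))
```

For a strongly blurred test image, the second value should be much smaller than the first but still nonzero, matching the behavior described above.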

I give myself a grade of 9 for this activity. The results we obtained were convincing, but they still left some questions unanswered. I would like to thank Master, Jaya, Orly, and Thirdy for helping me realize that I was wrong.

Main reference

A19 – Restoration of blurred image, AP186 2009